Have you noticed a sudden influx of indexed pages in Google Search Console recently? You’re not alone, especially if your site runs on Shopify. The most likely cause is a bot spam attack that exploits the way Shopify handles site search query URLs (not a hack!), and it has affected thousands of websites in recent months. This article explains why these pages appear, shows you how to check whether your site is affected, and offers several ways to fix the issue so that your SEO is not harmed.

Is my website hacked? No – this is a bot attack via your site search query URLs

The first reports of this Spam attack date back to July 2022; however, the majority of reports, as well as the cases I have seen personally, started to appear in late 2022 (December).

This Spam attack seems to be a Shopify vulnerability – many people have reached out to Shopify support only to get mixed answers such as:

- ‘reach out to your developer’

- ‘it’s a Google issue’

- someone has manually created the pages “in part by using the ‘Vendor’ field to create a URL”

- “disavow these backlinks from Google” – which doesn’t really make sense considering they aren’t external backlinks. That said, it is worth using a variety of tools to check whether your site has had an influx of backlinks pointing to the Spam URLs on your site. I have not seen this personally as yet, but I know it is a tactic that spammers have used before

- “injected by a third party app”

Update – there have been reports of this occurring on Magento sites as well.

The attack uses vendor query URLs to spam thousands of fake search queries at websites, which then dynamically generate URLs matching those queries. To test one of these queries yourself, you could type www.testwebsite.com.au/collections/vendors?q=test into your browser and see what comes up. Generally, you should see either a 404 page or a ‘no results found’ type of page, which is the expected behaviour.

The ‘?q=’ in the URL is a search query string, which can be supplied by users or, in this case, by bots. The page does not ‘technically’ exist independently on the website, but it does exist as a search result page on the site, which Google can then pick up and index in its search results.
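As a rough illustration of how the ‘?q=’ part of these URLs works, the Python sketch below pulls the query text out of a vendor URL and decodes it into readable text. The function name `vendor_query` and the example query are placeholders I have made up for illustration; this is not something Shopify provides.

```python
from urllib.parse import urlsplit, parse_qs


def vendor_query(url):
    """Return the decoded ?q= text of a /collections/vendors URL, or None.

    Hypothetical helper for illustration; it only inspects the URL string
    and never contacts the site.
    """
    parts = urlsplit(url)
    if not parts.path.rstrip("/").endswith("/collections/vendors"):
        return None
    # parse_qs decodes percent-encoding (e.g. %20 becomes a space).
    values = parse_qs(parts.query).get("q")
    return values[0] if values else None


print(vendor_query("https://www.testwebsite.com.au/collections/vendors?q=Buy%20cheap%20coins"))
```

Decoding the query this way makes it much easier to see what the attacker is actually trying to promote.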

On a manual Google search for the text appearing in one of the Spam URLs, there appear to be more than 13 million affected pages currently indexed by Google, and that is for just one of the many recurring text phrases.

Here is what one of these Spam pages may look like (header blanked out):

Why people use bots to do this

There are several reasons why people may use bots to create malicious Spam queries in bulk. The first is negative SEO, a tactic used to attack or de-rank competing sites. Given that this issue has been reported so widely, across hundreds if not thousands of websites, that is unlikely to be the case here. The more likely reason for this particular attack is promotion: the dynamically generated query page displays whatever the attacker is trying to promote, such as a website URL or a product title.

Why this may be a problem for your site

If the query URLs appearing in Google Search Console contain a ‘+’, there is a good chance there is nothing to worry about, as the default robots.txt file should contain a disallow line for /*/collections/*+*. However, Shopify stores generally do not have the /vendors? path disallowed in robots.txt by default, meaning there is a good chance that these Spam query URLs are being picked up and indexed by Google. Some reports describe only a handful of these URLs being indexed, while others describe thousands or even millions.

Spam URLs flooding a site’s index is obviously not an ideal scenario for SEO.

How to check if this has affected your site

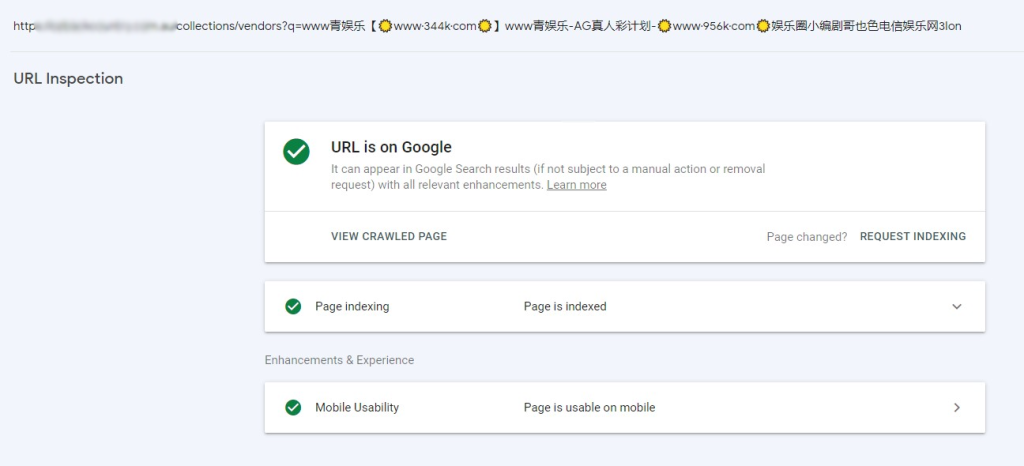

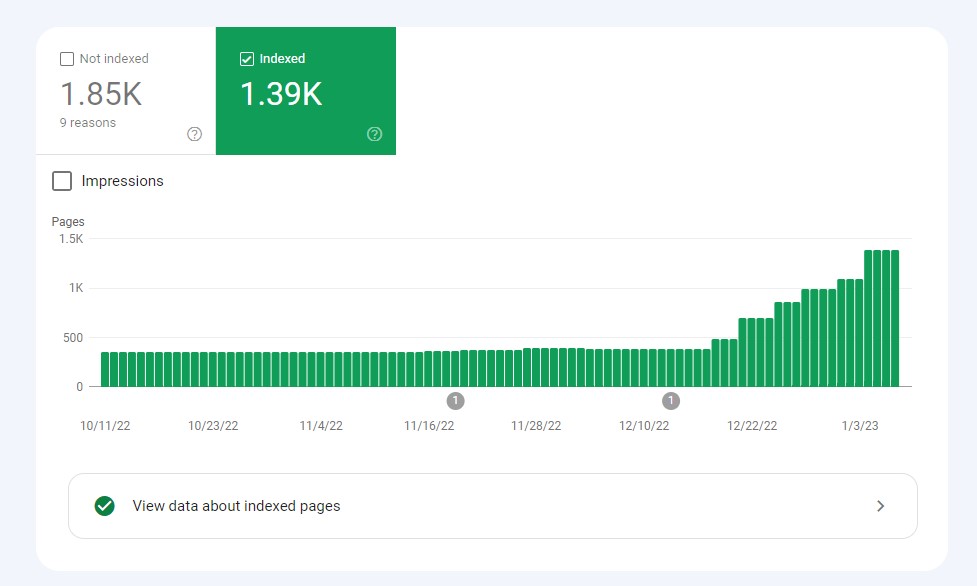

An easy check for site owners is to jump into Google Search Console and visit the Pages -> Indexed report. Check for an abnormal spike in Indexed pages, like this:

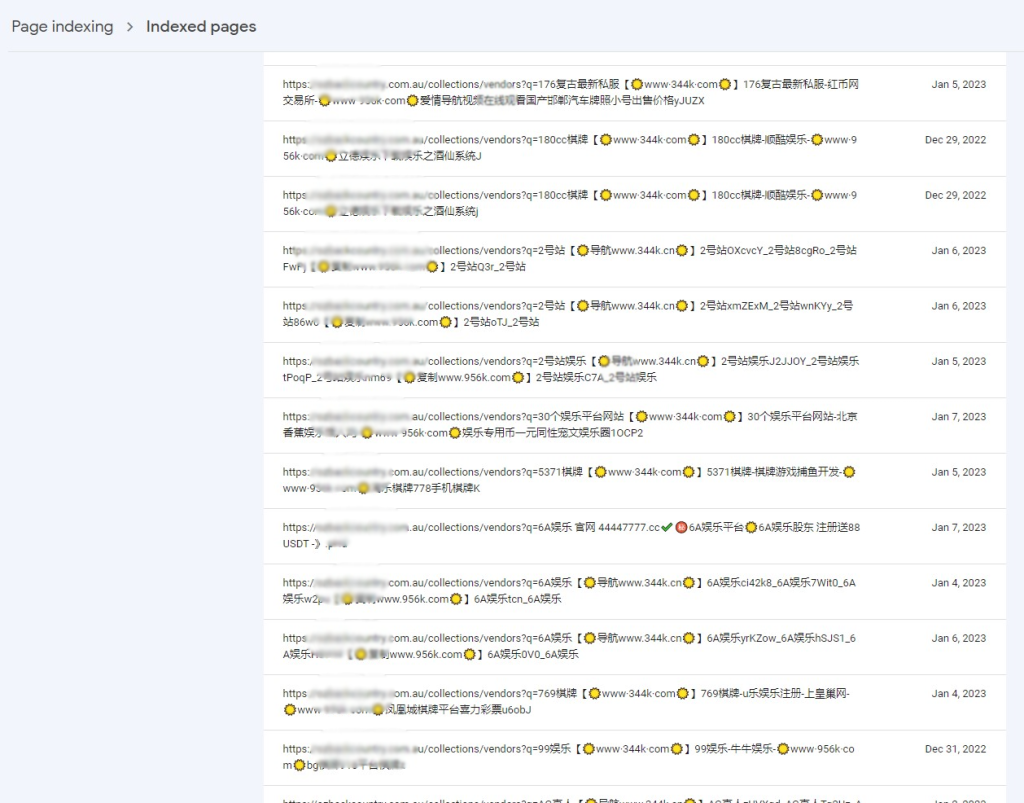

Head into ‘View data about indexed pages’, and if you see any obvious stand out URLs that appear to be Spam rather than a regular URL on your site like below, then your site has been affected.
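If the list of indexed URLs is long, a small script can help separate the vendor query URLs from your regular pages. The sketch below is a minimal Python example, assuming you have exported the indexed URLs from Search Console into a plain list; the function name `find_vendor_query_urls` and the sample URLs are made up for illustration.

```python
from urllib.parse import urlsplit, parse_qs


def find_vendor_query_urls(urls):
    """Return the URLs that look like /collections/vendors?q=... pages."""
    flagged = []
    for url in urls:
        parts = urlsplit(url)
        is_vendor_page = parts.path.rstrip("/").endswith("/collections/vendors")
        has_query = "q" in parse_qs(parts.query)
        if is_vendor_page and has_query:
            flagged.append(url)
    return flagged


# Hypothetical sample of exported URLs.
indexed = [
    "https://yourdomain.com/collections/shoes",
    "https://yourdomain.com/collections/vendors?q=Cheap%20coins%20for%20sale",
    "https://yourdomain.com/products/red-shoe",
]
print(find_vendor_query_urls(indexed))
```

Genuine Vendor pages use the same URL pattern, so anything this flags still needs a manual look before you treat it as Spam.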

SEO Solution Options

If this is happening on a small scale on your site, there is a chance that Google will ignore these URLs and simply count them as ‘Crawled – currently not indexed’ or ‘Discovered – currently not indexed’, in which case your SEO may not be affected. Where larger numbers of these URLs are being indexed by Google, they may cause a more considerable issue for your SEO.

The first thing to check is your Google Search Console indexed pages report to see if the issue is present on your site. The second thing to do is quickly check your robots.txt file to see if you are already disallowing Google from indexing any /vendors? URL paths.

If not, you may want to follow one or more of the options below to clean this Spam out of your index.

1. Add ‘noindex’ to the meta robots tag on any and all /collections/vendors? URLs:

This solution will noindex any and all Vendor URLs from your site. This may not be desirable if you have active Vendor URLs which are supposed to be indexed on the site, and driving valuable traffic. Even though these auto-generated pages are not uniquely editable (SEO settings, content etc.), they still may be getting indexed by Google, and driving traffic (and even sales) to your site. You can do a quick page search for these URLs in Search Console or GA to double check.

Here is the code to be placed in your theme.liquid file for this solution:

{% if template contains "collection" and collection.handle == "vendors" %}

<meta name="robots" content="noindex, follow">

{% endif %}

2. Add ‘noindex’ to the meta robots tag on any /collections/vendors? URLs where products = 0

This is a more targeted approach. If the check in the point above shows that you have /vendors? URLs driving traffic and/or sales to your site, and you need to keep those URLs indexed, this may be a better solution. It adds a ‘noindex’ tag to any Vendors URL where products = 0. This means that your ‘actual’ Vendor URLs, where your products are displayed, will remain indexed, while the generated Spam query URLs, which contain ‘no results’ (products = 0), will have ‘noindex’ added to the page’s meta robots tag.

Here is the code to be placed in your theme.liquid file for this solution:

{%- if request.path == '/collections/vendors' and collection.all_products_count == 0 -%}

<meta name="robots" content="noindex">

{%- endif -%}

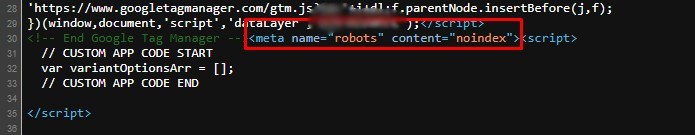

You should see this if you check the source code of one of the Spam URLs:
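To check the page source without reading through it by eye, something like the following Python sketch could be used. It assumes you have already saved or fetched the page’s HTML as a string; the class and function names (`RobotsMetaParser`, `has_noindex`) and the sample HTML are my own, for illustration only.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the content of every <meta name="robots"> tag in a page."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "robots":
            self.directives.append((attributes.get("content") or "").lower())


def has_noindex(html):
    """Return True if any robots meta tag in the HTML contains 'noindex'."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return any("noindex" in directive for directive in parser.directives)


# Hypothetical page source for a Spam URL after the fix is in place.
sample = '<html><head><meta name="robots" content="noindex"></head><body></body></html>'
print(has_noindex(sample))
```

Running this against the source of a genuine Vendor page (one that lists products) is a useful second check, since that page should not report a noindex tag under this solution.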

3. Disallow crawling of Vendor URLs via robots.txt

The next time Google crawls your site, Google’s bot will find a rule set in your robots.txt to not allow the crawling of /collections/vendors?q= URL paths.

Here is the code to be placed in your robots.txt file for this solution (under User-agent: *):

Disallow: */vendors?q=*
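Before relying on a rule like this, it can help to sanity-check which URLs it would cover. The Python sketch below is a simplified matcher, assuming the wildcard behaviour Google documents for robots.txt (‘*’ matches any run of characters, a trailing ‘$’ anchors the end of the URL). The function name `rule_matches` is my own, and this is not Google’s actual parser, so treat the result as a rough guide only.

```python
import re


def rule_matches(pattern, path):
    """Return True if a robots.txt path pattern matches a URL path.

    Simplified sketch: '*' matches any run of characters and a trailing
    '$' anchors the end of the URL; everything else is matched literally
    from the start of the path (including any query string).
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape the literal pieces and join them with '.*' for each wildcard.
    regex = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    if anchored:
        regex += "$"
    return re.match(regex, path) is not None


rule = "*/vendors?q=*"
print(rule_matches(rule, "/collections/vendors?q=Cheap%20coins"))
print(rule_matches(rule, "/collections/vendors"))
print(rule_matches(rule, "/collections/shoes"))
```

Only the first path contains ‘/vendors?q=’, so it is the only one of the three that the rule should block.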

4. Use Google Search Console ‘Indexing -> Removals’ tool to request removal of Spam URLs created

*A warning to use this tool with caution – please consult someone with considerable SEO experience before trying this*

The ‘Temporarily remove URL’ option under Removals in Google Search Console will “Block URL(s) from Google Search results for about six months and clear current snippet and cached version (they’ll be regenerated after the next crawl). For permanent removal, either block pages from indexing or remove them from the site.”

Here, you can “Remove all URLs with this prefix”, and submit the following:

https://yourdomain.com/collections/vendors?q=

Again, I cannot stress enough that this should be used with caution. Please consult an expert before doing this.

Google will process your request and remove from its index any URLs that match the prefix you have specified.

Google does specify that, to make the removal permanent, this should be only one step in the process. Google also suggests that you: ‘Remove or update the content on your site’, which cannot technically be done in this case since the content does not exist on the site itself; ‘Block access to the content, for example by requiring a password’, which is not an option here; or ‘Indicate that the page should not be indexed using the noindex meta tag’ (see Points 1 and 2 above).

5. Send any empty (‘no results found’) results pages to a 404 page

Set category and search pages without results to 404. Please speak to your developer about implementing this.

Example – https://skims.com/collections/vendors?q=Buy%20FUT%2023%20coins%2C%20Cheap%20FIFA%2023%20coins%20for%20sale%2C%20Visit%20Cheapfifa23coins.com%2C%20PS4%2FPS5%2FXBOX%20ONE%2FPC%2030%25%20OFF%20code%3AFIFA2023%7C%20lovely%20customer%20service%20if%20you%20want%20to%20buy%20%20fifa%2023%20coins%20ps4%20in%20AUSTRIA%21..%20%208qit

6. Prevent the query being printed in the title tag

If the query being printed by the spam bot can no longer be seen on the search results page on the site, then the incentive for the attackers is essentially lost. This solution forces an empty results page to show text OTHER than the spam text. With the code below, an empty Vendors results page shows ‘404 Not Found’ as its title, while every other page keeps its normal SEO title.

{%- if request.path == '/collections/vendors' and collection.all_products_count == 0 %}

<title>404 Not Found</title>

{%- else %}

<title>{{ seo_title }}</title>

{% endif %}

So far, I have used a combination of 2. and 4. above to successfully clean several sites of this Spam attack.

Please reach out if you have seen this happening to one of your sites, and let me know how you managed to tackle the issue!

**UPDATE – Shopify is well aware of the issue**

Many of the solutions above were found here.

If you’d like more information, I am an SEO freelancer in Melbourne available for hire. Get in contact with me today and I will respond to any questions or work enquiries.